It’s a hyper-modern problem on social media: A video or image of an animal doing something seemingly unbelievable in the wild pops up on your feed, only for you to realize it is, in fact, unbelievable. That’s because many of these images and videos—from wolves befriending house cats to brown bears jumping on a trampoline—are fabricated using artificial intelligence.

But what if fake wildlife images could be used to aid conservation?

That’s the goal of a new effort at the Duke Marine Robotics and Remote Sensing Lab, where scientists are using AI to help fill data gaps in whale monitoring systems. Ecologists and governments are increasingly tapping into AI tools to parse through an abundance of wildlife data, identifying and tracking species movements. But for certain endangered species like the North Atlantic right whale, so few individuals are left that there isn’t enough data to train the AI to identify them.

Duke University professor Dave Johnston, director of the Marine Robotics and Remote Sensing Lab, and doctoral student Henry Sun had a relatively simple idea to solve the problem: Create more images, just like people do on social media.

“Instead of always worrying about the negative aspects of [deepfakes], let’s flip the script. … How do we actually use this to actually make our work better?” Johnston said. In theory, these fake images could help train AI-assisted satellites that track and identify endangered species in the wild to protect them from threats or learn how climate change is affecting them, he said.

A small but growing number of scientists are leveraging generative AI to create synthetic plant and wildlife imagery and audio for data-scarce species in hopes of helping conserve them. But there are downsides to this approach, both in the reliability of the data and the systems themselves.

Artificial intelligence is powered by energy- and water-hungry data centers. As AI use ramps up, scientists are reckoning with how to ensure it helps solve conservation problems rather than fueling them.

Deepfake Whales

Despite being the size of a school bus, North Atlantic right whales are notoriously difficult to find.

Right whales were once common along the eastern coasts of the United States and Canada, but now only around 370 are left. As the waters of this region became busier with ship traffic and fisheries in recent decades, the whales have frequently fallen victim to vessel strikes and entanglements with lobster gear.

Among the efforts to minimize these threats are government-mandated no-fishing areas and slow-down zones for vessels in certain spots throughout the year. But the only way to ensure these steps are effective is knowing where the whales are.

In recent years, the Canadian and U.S. governments have invested millions of dollars in programs to create satellites that help track the whales on their annual migrations, during which some travel more than 1,000 miles. That’s a lot of ocean area to cover, and detecting whales can be difficult with copious amounts of data coming in from the satellites.

This is where AI can come into play. Systems can be trained to identify whales in the ocean and process huge datasets in a fraction of the time it would take humans. It works only if these high-tech whale spotters have enough images as references, Sun said.

“A big problem … is the lack of good-quality training data and, more importantly, the lack of good quantities of training data for such rare species,” Sun said.

Some machine-learning models can learn patterns and identify animals like birds or wolves by their calls and appearance. But for endangered species, data is often scant. To expand a dataset, researchers will typically make tweaks to existing images by cropping, recoloring or flipping them.
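As a rough illustration of those tweaks, here is a minimal Python sketch that flips, crops and brightens a tiny made-up grayscale image stored as a grid of numbers. The pixel values and the function names (`flip_horizontal`, `crop`, `brighten`) are hypothetical and chosen only for illustration. This is not the researchers' code, and real pipelines typically rely on an image library rather than plain lists.

```python
# A toy 3x4 grayscale "image": rows of pixel brightness values (0-255).
# The values are made up for illustration.
image = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
]


def flip_horizontal(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def crop(img, top, left, height, width):
    """Keep only a rectangular window of the image."""
    return [row[left:left + width] for row in img[top:top + height]]


def brighten(img, amount):
    """Shift every pixel's brightness, clamped to the 0-255 range."""
    return [[max(0, min(255, p + amount)) for p in row] for row in img]


# Three altered copies of the same picture.
augmented = [
    flip_horizontal(image),
    crop(image, 0, 1, 2, 2),
    brighten(image, 150),
]
```

Each call returns a slightly different version of the same picture, which can be added to a training set alongside the original.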

This technique can only go so far. Animals in the wild take on a multitude of positions and appearances depending on the time, their age, coloring and setting. For example, an image of a young whale swimming at the surface off the coast of Florida can look radically different from an image of the same whale breaching in the dark waters of New England the next season.

While a human biologist may easily be able to identify this animal in both cases, artificial intelligence must be trained on as many of these different circumstances as possible to improve its accuracy. Combining their marine ecology and computer science expertise, Johnston and Sun got to work creating more realistic whale copies using what’s known as a diffusion model.

These generative AI models are trained on billions of pairs of images and their corresponding text captions to “teach” a model to understand how a picture might look based on a certain description. During training, the model adds random distortions known as noise to an image until it’s unrecognizable, then learns to work backward by removing the noise step by step. To generate novel content, it starts from noise and removes it step by step until a new image is produced. Johnston compares it to a chef identifying a specific ingredient, then making an entirely new dish.

“All of those denoising steps are like when the chef looks at the dish and smells it and tastes it—it’s like, ‘Oh, I know it has basil and it has cumin and it has this in it and it’s slightly charred,’” he said. “A chef can use that information to make a dish and it’s not going to be exactly the same as what would come from the recipe, but it’s going to be an iteration.”
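The noising half of that process can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Duke team's method: it uses the common textbook formulation in which an image is blended with Gaussian noise, and the pixel values, the `add_noise` helper and the `schedule` list are all hypothetical. The learned denoising network that runs the process in reverse is not shown.

```python
import math
import random


def add_noise(pixels, keep, rng):
    """Blend pixels with Gaussian noise.

    keep=1.0 returns the clean image; keep=0.0 returns pure noise.
    """
    return [
        math.sqrt(keep) * p + math.sqrt(1.0 - keep) * rng.gauss(0.0, 1.0)
        for p in pixels
    ]


rng = random.Random(0)  # fixed seed so repeated runs match

# Hypothetical pixel values, scaled to roughly the range -1 to 1.
clean = [1.0, -1.0, 0.5, -0.5]

# Hypothetical schedule: the share of the original image kept at each step.
schedule = [1.0, 0.9, 0.5, 0.1, 0.0]

for keep in schedule:
    noisy = add_noise(clean, keep, rng)
    print(keep, [round(v, 2) for v in noisy])
```

As `keep` falls toward zero, the printed values drift away from the original pixels until only noise is left. A diffusion model is trained to undo those steps one at a time.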

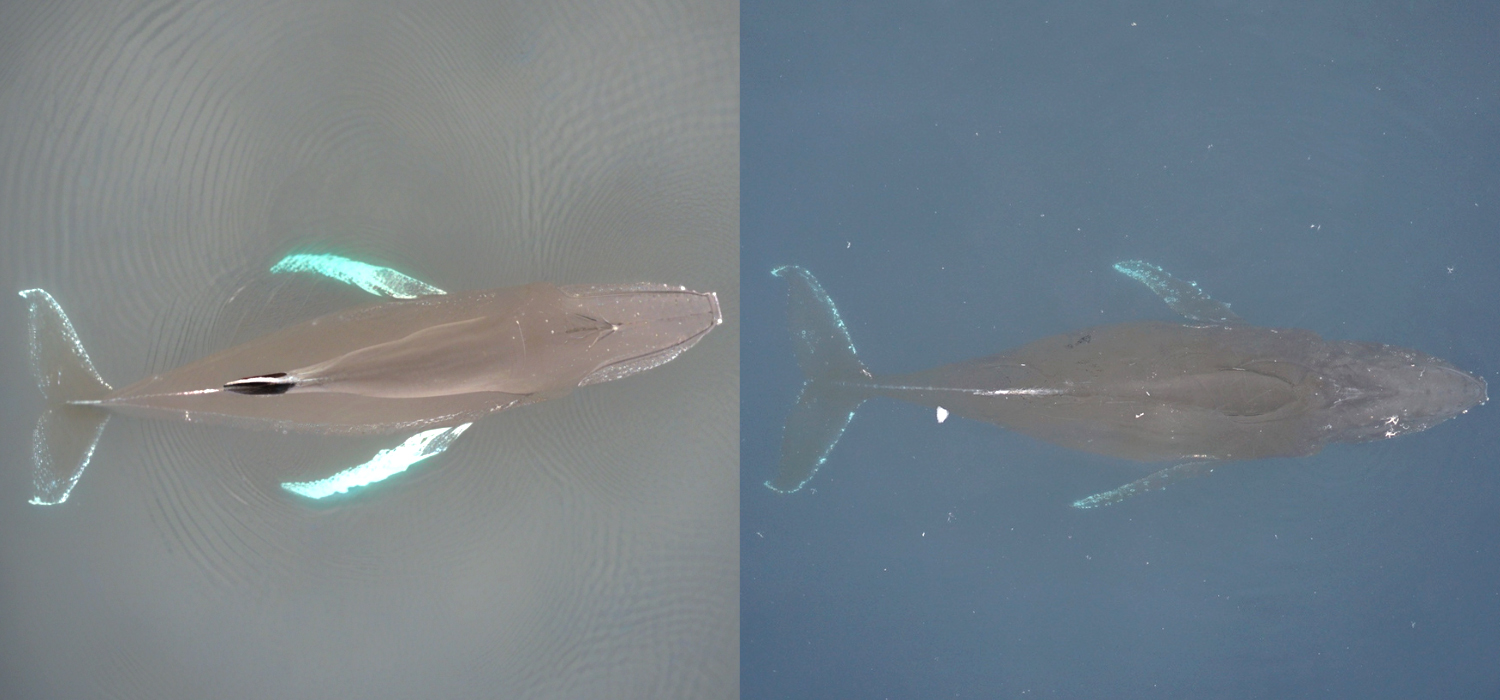

The researchers started with several pre-trained diffusion models, but took it one step further by also training them on a small subset of right whale images, a process known as fine-tuning. The system was then able to create more than a thousand images of whales in various positions and settings. The researchers also created several images of humpback whales for comparison.

While many of the images closely resembled real animals, the AI also produced some wonky copies, a common problem for AI tools. For example, one of the whales had two tails—and no head.

Overall, though, the researchers say their models successfully tricked Google’s reverse image search tool more often than image-generating AI systems that were not fine-tuned using whale-specific photos. The process is in the experimental phase, and was inspired by a broader collaboration between Duke University and several Canadian organizations—including the Canadian Space Agency, Fisheries and Oceans Canada, the University of New Brunswick and the environmental consulting group Hatfield Consultants—to build a space-based detection system for North Atlantic right whales.

The Duke team is working on a paper containing the results from their test, which Sun said could eventually be applied to other endangered species.

Mimicking Bird Calls, Fighting Weeds

The scientists at Duke University are not the only researchers experimenting with AI to help augment biodiversity data.

In recent years, scientists have developed complex AI systems such as BirdNET and Merlin to help identify birds by their calls. Similar to the right whales, though, far less data is available for rarer species. To help fill in the gaps, experts at the University of Moncton in Canada developed a deep-learning AI tool called ECOGEN that can generate realistic bird sounds to supplement samples of rare species. These synthetic songs can be fed into audio identification tools used for ecological monitoring.

“Essentially, for species with limited wild recordings, such as those that are rare, elusive, or sensitive, you can expand your sound library without further disrupting the animals or conducting additional fieldwork,” Nicolas Lecomte, a professor at the University of Moncton who helped develop the model, said in a press release.

In a 2023 study, Lecomte and a team of researchers found that adding artificial sounds generated by ECOGEN improved bird-song classification accuracy by 12 percent on average. Other labs are experimenting with similar text-to-audio models for avian conservation, but the field appears to be in its early stages.

As such, some researchers, such as Irina Tolkova, a postdoctoral fellow at Cornell University’s K. Lisa Yang Center for Conservation Bioacoustics, are not yet confident in applying this type of approach to their work.

“I think the challenge with AI methods is that they’re almost always more black box and not very interpretable, so if there is something unexpected, then you might just miss it,” she said. “I personally would still be a bit hesitant to trust a model that’s kind of generating new sounds from a very small sample of an endangered species.”

In other cases, researchers are using generative AI that may be able to target invasive species such as leafy spurge, which causes up to $45 million in damages each year to U.S. beef and hay production and is becoming more common with climate change. The toxic weeds spread rapidly across ranchlands in the Midwest and Mountain West, where they can poison cattle and other animals. Ranchers in Montana are currently working on an AI-assisted drone program to uncover leafy spurge before it can wreak too much havoc on their lands, but not much satellite imagery exists of the toxic weed to train their systems.

So computer scientists at Carnegie Mellon University teamed up with the ranchers to create synthetic images of leafy spurge, including samples in different weather conditions—from a wintry snowstorm to a spring bloom. That helps AI monitoring systems better detect the weed—and avoids the costly and cumbersome task of gathering data in the field, according to Ruslan Salakhutdinov, a professor at CMU’s School of Computer Science.

“This is one of the examples [of] real-world application where real data is very hard to get, but using generative AI technology … we can generate these instances across many, many different configurations,” he said. Including the synthetic spurge images in the AI system improved detection significantly, according to a 2025 paper authored by Salakhutdinov and his team.

This generative AI approach could be used in other settings where it’s difficult or dangerous to gather data, including rural areas around the world where there may not be enough funding to send out teams, Duke University’s Sun said.

“If you can supplant gaps, maybe you can reduce the field burden … and that saves time and resources,” he said.

An Artificial Intelligence Catch-22

But artificial intelligence is a double-edged sword in the environmental research world, contributing to the very problems ecologists are trying to address by using vast amounts of energy and water. A not-yet-peer-reviewed 2024 study by researchers at the AI startup Hugging Face and Carnegie Mellon University found that generating just one image using certain AI models can require as much energy as fully charging your smartphone.

When the energy to power AI comes from fossil fuels, these cumulative queries worsen global warming, a leading threat to wildlife and plants around the world. Right whales face mounting risks from warming waters, which are reducing the availability of copepods—the marine giant’s favorite food—in the Gulf of Maine. As a result, the whales are chasing the copepods into new areas where they have little to no protection.

Meanwhile, AI requires a great deal of water to cool the hardware required to operate it. A “hyperscale” data center can use as much as 365 million gallons a year. Though the total impact of water usage is unclear, experts have expressed concerns about how this might affect wetland ecosystems, particularly in the Great Lakes region of the U.S. The environmental impact of AI systems for ecological research is far less than that of big technology companies. But scientists are still wrestling with this dilemma.

“As a young person who really wants to feel like I’m making a positive contribution, it becomes difficult to grapple with a lot of these sort of cost-benefit-analysis kind of decisions,” Sun said. “I’m training this model that uses this amount of energy, but we have this benefit of maybe being able to better manage these whales, or, in this case, develop a tool that could result in conservation. Does that outweigh the harms? It’s difficult to say.”

Kasim Rafiq, a wildlife biologist at the University of Washington, has similar thoughts on AI. Its use, he says, is just starting to ramp up in the ecology field. He is working on a project that uses AI to help predict how changing environments, such as habitat loss and long-term global warming, impact animal behavior.

“You’ve got all these benefits, but then you also have the huge energy and data infrastructure costs, which have this environmental impact, which puts you in this catch-22 situation,” Rafiq said. One way to mitigate this, he said, is using AI only when it is beneficial and necessary, and with systems that require fewer resources.

“We talk about the need to develop more energy-efficient and smaller models, which could potentially be run on researcher computers, as opposed to needing huge data centers,” Rafiq said.

And in the case of whales, deepfake clones aren’t always used for conservation purposes. In 2023, Gizmodo reported that a fossil-fuel-funded think tank, the Texas Public Policy Foundation, sent out an email with what seemed to be an AI-created image of a dead whale lying on a beach in front of a wind farm.

The group has tried to discredit offshore wind by claiming it kills whales—a right-wing talking point that scientists say there’s no evidence to support. The Texas Public Policy Foundation did not respond to multiple requests for comment.

Despite the AI downsides, a group of scientists wrote in a 2025 paper that the technology has the potential to “revolutionize conservation.” They see numerous ways to use AI that could help stem biodiversity loss, from monitoring the wildlife trade to projecting how climate change could disrupt animal habitat.

Cornell University’s Tolkova has some reservations but is overall “very open-minded.”

“I like to hope that there’s a lot that machine learning can do that isn’t just useful for creating chatbots, but also for meaningful conservation impact, for sustainability and so on,” she said. “I think it’s worth exploring those opportunities.”

About This Story

Perhaps you noticed: This story, like all the news we publish, is free to read. That’s because Inside Climate News is a 501c3 nonprofit organization. We do not charge a subscription fee, lock our news behind a paywall, or clutter our website with ads. We make our news on climate and the environment freely available to you and anyone who wants it.

That’s not all. We also share our news for free with scores of other media organizations around the country. Many of them can’t afford to do environmental journalism of their own. We’ve built bureaus from coast to coast to report local stories, collaborate with local newsrooms and co-publish articles so that this vital work is shared as widely as possible.

Two of us launched ICN in 2007. Six years later we earned a Pulitzer Prize for National Reporting, and now we run the oldest and largest dedicated climate newsroom in the nation. We tell the story in all its complexity. We hold polluters accountable. We expose environmental injustice. We debunk misinformation. We scrutinize solutions and inspire action.
